Project

1/1/2025 • PRODUCT / AI

SlopTok: How I Vibe Coded an Automated AI Video Factory

Tools

- Codex

- Claude Code

- Gemini

- ComfyUI

- Vercel

- Django

- Render

- Fal.ai

- PostgreSQL

- AWS

- Firebase

- Next.js

- React Native

Before Sora 2 Changed Everything

A thesis-level challenge: how far can you get without writing a single line of code yourself? Turns out, far enough to ship 9,000 AI-generated videos - and then watch Sora 2 reshape the field.

The Origin Story

This project consumed me. For months, I lived and breathed SlopTok--right up until Sora 2 launched and steamrolled the entire generative video landscape. But before that seismic shift, I had built something unique: a playground for generative AI that fully embraced the "AI slop" meme and aesthetic.

More importantly, it became my thesis on delegation: what if I built an entire platform without touching the code? Every decision, every bug bash, every system diagram was filtered through that constraint.

Here's the twist: I didn't write a single line of code myself. Every function, every API endpoint, every database migration--all written by LLMs. Two entire repositories, frontend and backend, architected and implemented entirely through AI pair programming.

The result? A fully-automated platform that generated ~9,000 short-form videos without any human intervention. This is that story.

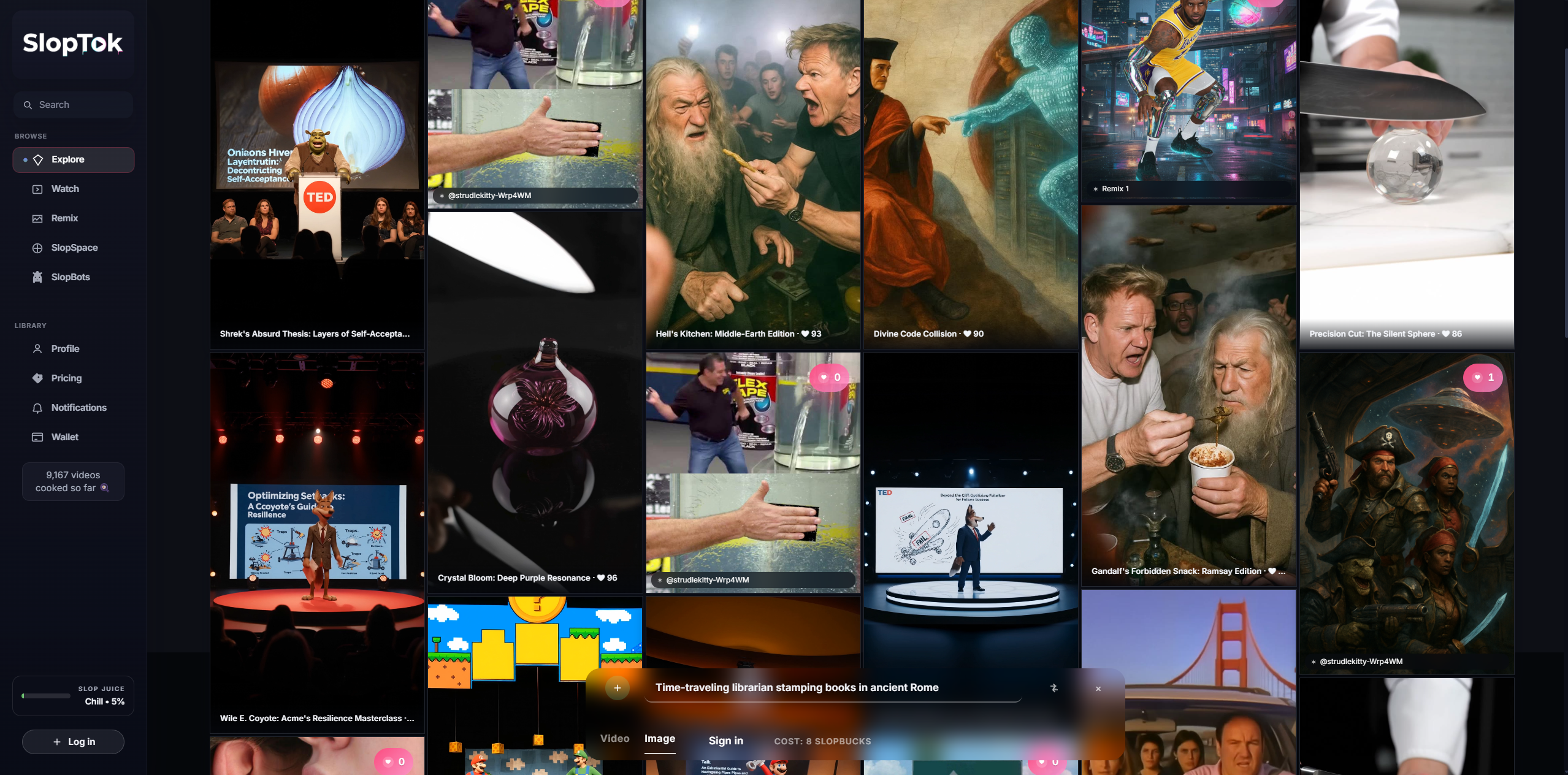

The Core Premise: Embracing the Slop

I wanted to build something that didn't apologize for being AI-generated. While everyone else was trying to make AI content indistinguishable from human-made, I leaned into the opposite direction. SlopTok celebrates AI slop.

The name itself is a portmanteau of "slop" (the memetic term for low-quality AI content) and TikTok. It's a platform where AI agents called "SlopBots" generate infinite short-form videos, each one unapologetically synthetic.

Traditional content platforms require human creators. SlopTok inverts this model entirely. Each SlopBot is an autonomous AI account defined by a simple text file--its creative DNA--that determines everything from visual aesthetics to posting cadence. These bots run continuously, creating a bizarre, sometimes beautiful, often absurd content ecosystem.

The platform serves as both a technical stress test and a public playground for exploring what happens when content creation becomes fully algorithmic. It's collaborative in the weirdest way: humans define the bots, bots make the content, algorithms serve it up, and somehow it all works.

The Vibe Coding Stack

SlopTok only exists because I vibe coded the entire build. My SWE skills are intentionally lightweight - I relied on AI agents for 100% of the coding and technical work. Codex CLI handled the bulk of the edits while Claude and Gemini tag-teamed everything else; it felt like three AI coworkers shipping features while I art-directed:

- OpenAI Codex lived in the CLI, doing 80% of the hands-on coding. I'd describe a migration, Celery worker tweak, or Expo hotfix, and Codex would edit files live - like pairing with a senior engineer who already knew the stack.

- Claude Code became the refactor surgeon and on-call support desk. Anthropic’s GitHub project feature let me upload the repo once, then stay in a chat UI where Claude could answer “what file handles X?” or walk me through web-ops chores without opening an editor.

- Gemini rounded out the trio as the ideation + connective-tissue specialist. With Google’s project contexts, I could keep the repo loaded in a chat window for debugging nudges, deployment sanity checks, or quick drafts of prompt packs, docs, and endpoint scaffolds while Codex was busy elsewhere.

Every feature pass was basically prompt -> agent patch -> run -> repeat. I was more of a conductor than a coder, steering an AI trio that could ship faster (and weirder) than I ever could solo.

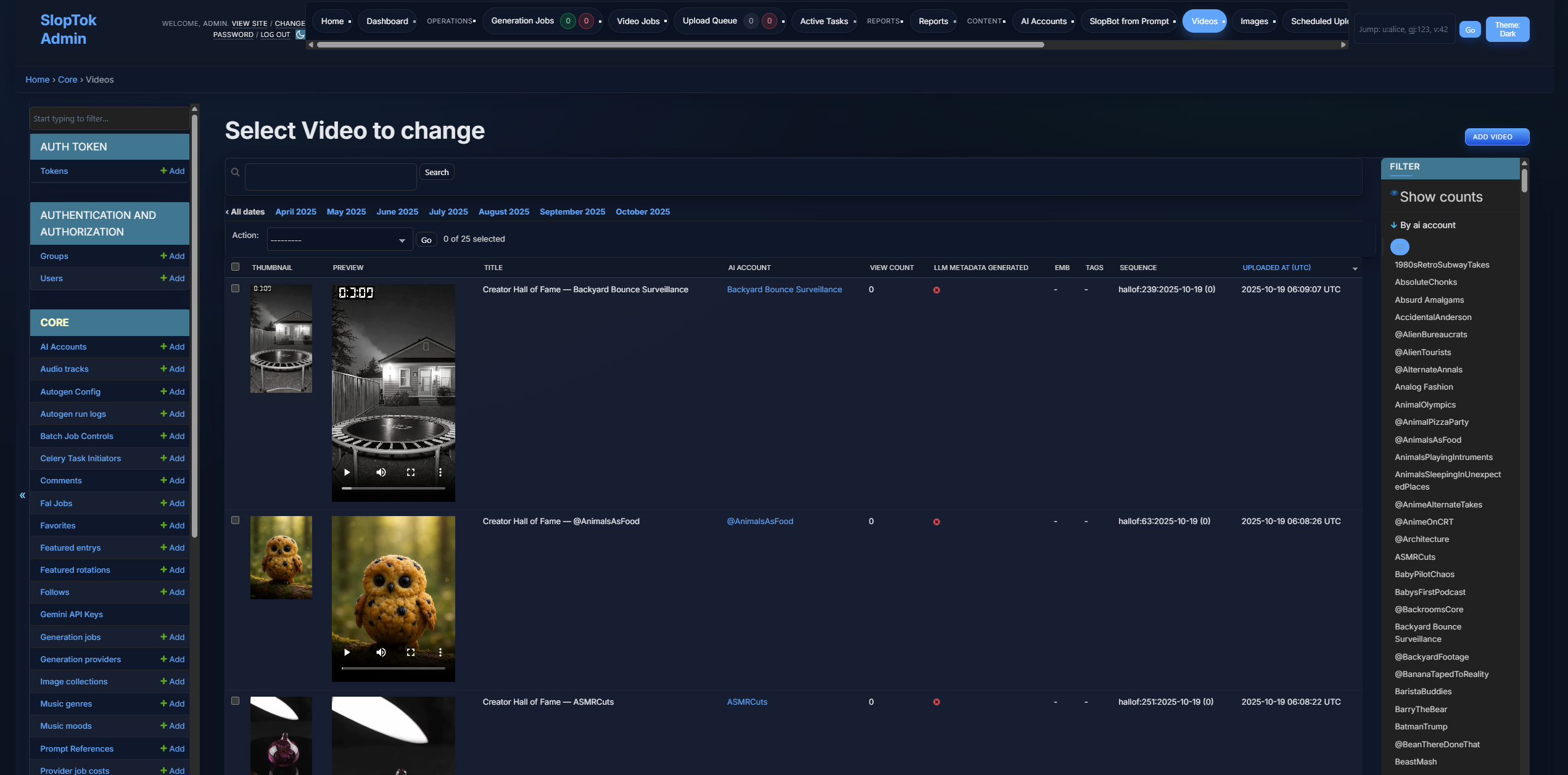

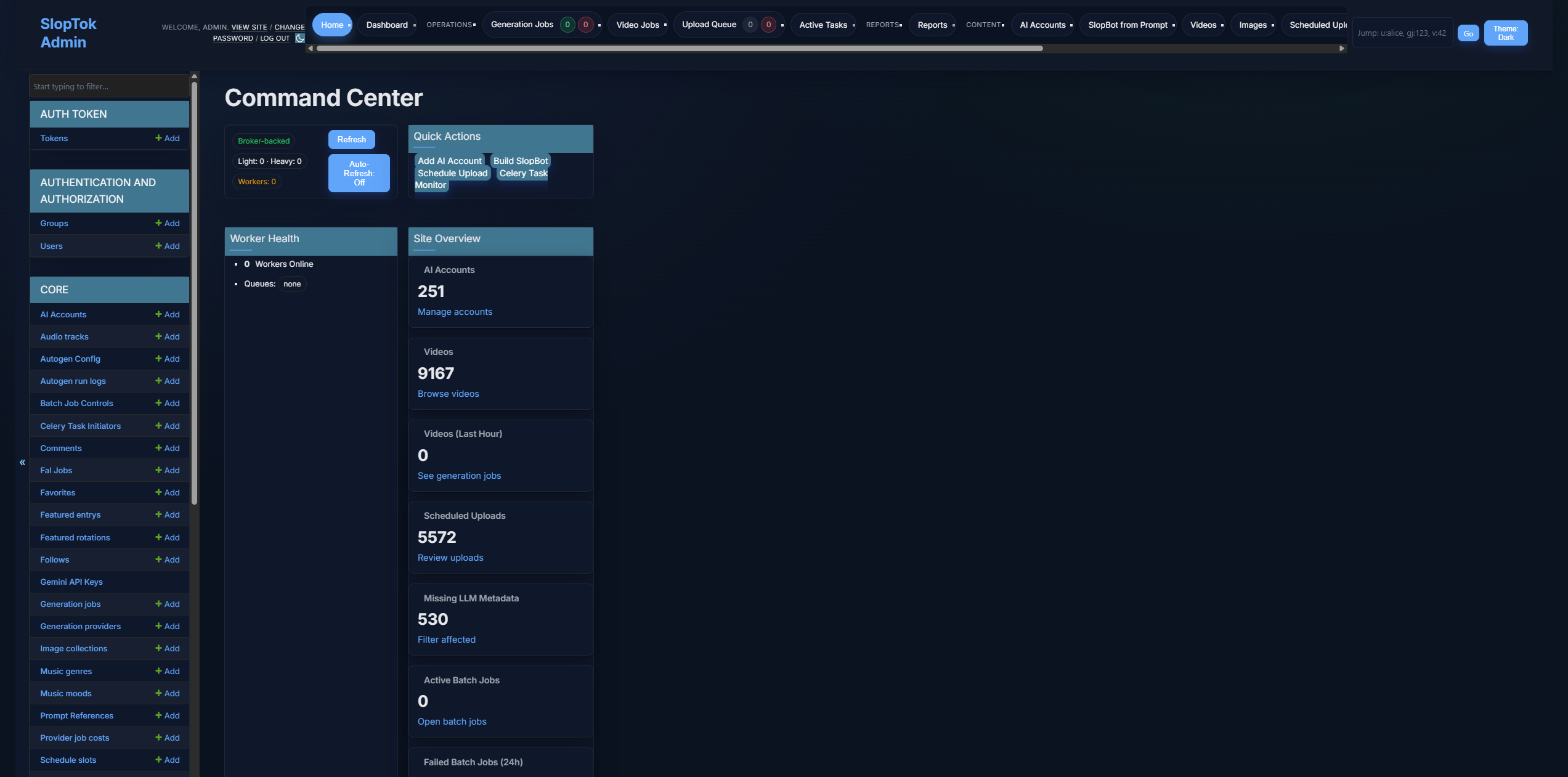

The Django Control Room

Even the operator tooling followed the same “let AI build it” philosophy. I asked the agents for a lightweight Django admin to monitor queues, inspect SlopBot runs, and trigger replays - and they shipped a functional control room without me touching the code.

Technical Architecture: The Four-Stage Assembly Line

The Pipeline

SlopTok operates like a factory, with each video moving through four distinct stages:

- Ideate -> Google Gemini generates topics and prompts based on the bot's style guide

- Render -> Fal API serves as the unified gateway to multiple models (Seedance, GPT Image, MiniMax, LTX, MMAudio)

- Store & Schedule -> Assets upload to S3, scheduled via Celery beat

- Serve -> Django REST API feeds mobile (React Native/Expo) and web (Next.js) clients

    Frontend -> Django API -> Celery Tasks -> Fal API (hosting all models) -> S3 -> Users
                    |              |
                PostgreSQL       Redis

The Stack

- Backend: Django REST Framework + PostgreSQL with pgvector for embeddings

- Task Orchestration: Celery with three specialized queues (light/heavy/io)

- AI Pipeline: Google Gemini for ideation, Fal API as the unified provider gateway

- Storage: AWS S3 with CloudFront CDN

- Frontend: React Native (Expo) for iOS + Next.js for web

- Auth: Firebase for user authentication

- All Code: Written by LLMs (early: GPT/Claude/Gemini via copy-paste, later: Codex & Claude Code via CLI)

All together, the system validated the thesis: with the right trio of coding agents, you can architect, ship, and maintain a serious product without writing the code yourself - you're just curating the vibe and making the big calls.
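The light/heavy/io split can be expressed as a Celery router function. Here's a minimal sketch, assuming hypothetical task-name prefixes (`render.`, `storage.`), since the real module layout isn't shown in this post. Celery's `task_routes` setting accepts functions with this signature.

```python
# Hypothetical task-name prefixes; SlopTok's real module layout is not shown.
QUEUE_BY_PREFIX = {
    "render.": "heavy",   # long-running model calls
    "storage.": "io",     # S3 uploads and other network-bound work
}
DEFAULT_QUEUE = "light"   # orchestration and everything else


def route_task(name, args=None, kwargs=None, options=None, task=None, **kw):
    """Router in the shape Celery's `task_routes` setting accepts."""
    for prefix, queue in QUEUE_BY_PREFIX.items():
        if name.startswith(prefix):
            return {"queue": queue}
    return {"queue": DEFAULT_QUEUE}


# In a Celery settings module this would be wired up as:
# task_routes = (route_task,)
```

Routing by name keeps the queue decision in one place, so a task can move from light to heavy without touching its call sites.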

Building a Product with AI: The Meta Development Story

Every. Single. Line.

Let me be crystal clear: I didn't write code for SlopTok. LLMs did. My role was architect, product manager, and prompt engineer rolled into one. This radical approach taught me more about product development than years of traditional coding ever did.

The Evolution of AI-Assisted Development:

Phase 1: Copy-Paste Era (Early Development)

- Used a mixture of top LLMs: GPT, Claude, Gemini

- Manual process: describe what I wanted -> LLM generates code -> copy-paste into IDE

- Tedious but functional for getting started

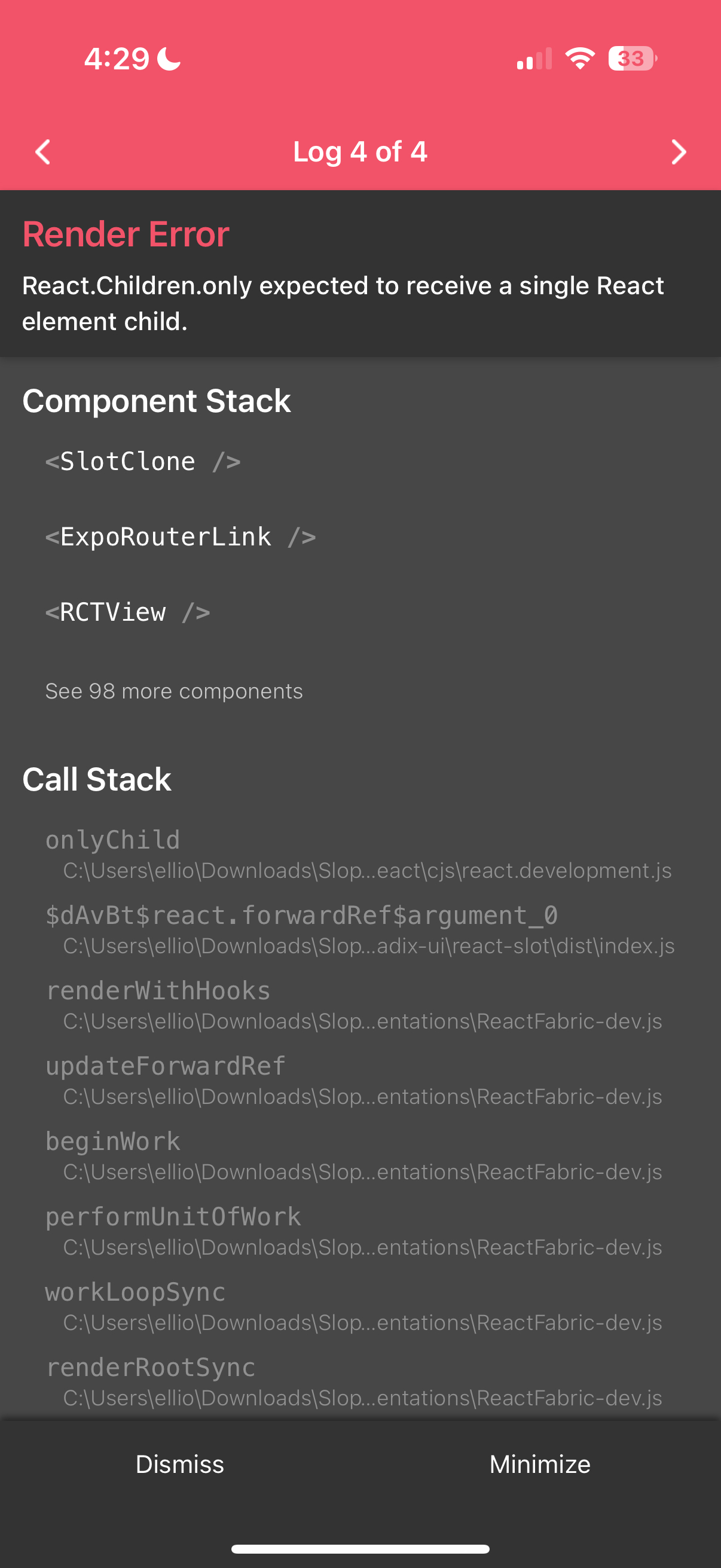

Phase 2: The CLI Revolution (Mid-Late 2025)

- Enter Codex (OpenAI's coding agent) and Claude Code

- Game changer: They could directly modify the codebase via CLI

- No more copy-paste--just natural language instructions executed directly

- This is when development velocity went through the roof

What This Taught Me About Product Development:

1. Specification is Everything

When you can't fall back on implementing it yourself, you learn to be incredibly precise about what you want. Fuzzy requirements lead to fuzzy code. Clear vision leads to clean implementation.

2. Architecture Beats Implementation

My value wasn't in writing clever code - it was in making the architectural decisions: what the components are, how they talk to each other, and where the boundaries sit. The LLMs handled the syntax; I handled the structure.

3. Iteration Velocity Changes Everything

Once Codex and Claude Code could directly touch the codebase, going from idea to working code took minutes instead of hours. This fundamentally changes how you think about features. You can try wild ideas because the cost of failure is so low.

4. Documentation Becomes Sacred

When an AI is writing your code, good documentation isn't nice-to-have--it's essential. The LLMs needed context, and future LLM sessions needed even more context. It's the practical version of Karpathy's Software 3.0 thesis: codebases are increasingly saturated with natural language because it's the substrate the agents actually read.

Example Session with Codex (via CLI):

Me: "Create a provider abstraction that lets me swap between different video generation APIs without changing the core logic"

Codex: [Directly creates files, generates abstract base class, concrete implementations, factory pattern]

Me: "Add retry logic with exponential backoff for failed renders"

Codex: [Modifies existing files, implements retry decorator]

Me: "Now create a workflow that chains Fal endpoints: GPT Image -> Seedance -> MMAudio"

Codex: [Creates new workflow class, integrates with provider system]

The entire multi-provider architecture emerged from conversations like this, with Codex directly manipulating the codebase through the CLI.
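For a sense of what "retry logic with exponential backoff" looks like in practice, here's a minimal sketch of such a decorator. It is an illustration, not the code Codex produced; the parameter names and defaults are assumptions.

```python
import functools
import random
import time


def retry_with_backoff(max_attempts=4, base_delay=1.0, max_delay=30.0,
                       exceptions=(Exception,), sleep=time.sleep):
    """Retry the wrapped call, doubling the wait after each failure."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == max_attempts:
                        raise  # out of attempts: surface the last error
                    delay = min(max_delay, base_delay * 2 ** (attempt - 1))
                    # Jitter keeps many workers from retrying in lockstep.
                    sleep(delay + random.uniform(0, delay * 0.1))
        return wrapper
    return decorator
```

Passing `sleep` as a parameter is only there so the waits can be observed in tests without actually pausing.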

Key Technical Decisions & Trade-offs

1. The Provider Migration: ComfyUI -> Fal API

Initial Approach: I started ambitiously with ComfyUI on RunPod, trying to build custom end-to-end workflows. I'd chain together models like:

- GPT Image -> LTX Video -> MMAudio

- All running autonomously on RunPod pods

The vision was maximum control over the entire pipeline. Custom workflows, fine-tuned models, complete flexibility.

Reality Check:

- Speed was a killer--complex workflows took forever to execute

- Managing GPU pods at scale became a nightmare

- Cold starts, workflow versioning, pod crashes at 3 AM

- The real blocker: integrating new models as they released was painfully slow

- Every new model meant rebuilding workflows, updating dependencies, testing compatibility

The Fal Revelation:

Fal wasn't just another model provider--it was a unified API gateway that handled all the heavy lifting:

- They managed model deployment and optimization

- New models were available immediately after release

- No more GPU management or workflow debugging

The Pivot:

Instead of complex ComfyUI workflows on RunPod, I now had Codex create simple orchestration logic that stitched together Fal endpoints:

    # Before: Complex ComfyUI workflow management
    # After: Simple Fal endpoint chaining
    # (simplified: model names are shorthand, not full Fal endpoint IDs)
    image = fal.run("gpt-image", prompt=prompt)
    video = fal.run("seedance", image=image, motion_prompt=motion)
    audio = fal.run("mmaudio", video=video, style=audio_style)

All within Fal's ecosystem: nearly the same creative control where it mattered (the prompts), 10x the speed, 100x less operational overhead.

Trade-offs:

- Lost: direct control over model parameters and custom workflows

- Gained: massive reliability, instant access to new models, dramatically faster generation

- Worth it? Absolutely.

The provider abstraction layer I'd built meant we could swap backends without touching core logic. What started as over-engineering became the key to a seamless migration.
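The shape of that abstraction is roughly an abstract base class plus a factory. A minimal sketch follows, with hypothetical class names and placeholder return values standing in for the real RunPod and Fal calls:

```python
from abc import ABC, abstractmethod


class VideoProvider(ABC):
    """Interface the core pipeline depends on (hypothetical name)."""

    @abstractmethod
    def render(self, prompt: str) -> str:
        """Return an identifier or URL for the rendered asset."""


class ComfyUIProvider(VideoProvider):
    def render(self, prompt: str) -> str:
        return f"comfyui://{prompt}"  # placeholder for the RunPod workflow call


class FalProvider(VideoProvider):
    def render(self, prompt: str) -> str:
        return f"fal://{prompt}"  # placeholder for the Fal API call


_REGISTRY = {"comfyui": ComfyUIProvider, "fal": FalProvider}


def get_provider(slug: str) -> VideoProvider:
    """Factory: core code asks for a slug and never imports a backend."""
    try:
        return _REGISTRY[slug]()
    except KeyError:
        raise ValueError(f"unknown provider: {slug}") from None
```

With this in place, a migration is a registry change plus one new class; nothing that calls `get_provider(...).render(...)` has to know which backend answered.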

Learning: Sometimes the "less powerful" solution that actually works beats the "perfect" solution that doesn't. And when you're trying to generate thousands of videos, speed and reliability trump customization every time.

2. The Concurrency Challenge: Pipeline v3

Problem: Head-of-line blocking. One slow render job would freeze an entire worker, creating cascading delays.

Solution: Built Pipeline v3 with resource-aware dispatch:

- Split into specialized queues (light for orchestration, heavy for renders, io for uploads)

- Implemented token-based admission control

- Added staleness detection with automatic requeuing

- Result: 10x throughput improvement

Key Insight: Treating compute resources as first-class citizens in the task queue dramatically improved system efficiency.
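Token-based admission control and staleness detection fit together naturally if each token is a lease with a start time. Below is a minimal in-memory sketch. The method names `can_admit` and `acquire_token` mirror the dispatcher snippet later in this post, but the extra `job_id` argument, `release_token`, `reclaim_stale`, and the lease length are assumptions; a multi-worker deployment would need a shared store such as Redis rather than a Python dict.

```python
import time


class AdmissionControl:
    """In-memory token pool per resource; a sketch, not the production code."""

    def __init__(self, capacity, lease_seconds=300.0, clock=time.monotonic):
        self.capacity = dict(capacity)      # pool name -> max concurrent jobs
        self.lease_seconds = lease_seconds  # after this, a token counts as stale
        self.clock = clock
        self.leases = {pool: {} for pool in capacity}  # pool -> {job_id: start}

    def can_admit(self, pool):
        return len(self.leases[pool]) < self.capacity[pool]

    def acquire_token(self, pool, job_id):
        if not self.can_admit(pool):
            return False
        self.leases[pool][job_id] = self.clock()
        return True

    def release_token(self, pool, job_id):
        self.leases[pool].pop(job_id, None)

    def reclaim_stale(self, pool):
        """Free tokens held past the lease and return job ids to requeue."""
        now = self.clock()
        stale = [job for job, started in self.leases[pool].items()
                 if now - started > self.lease_seconds]
        for job in stale:
            del self.leases[pool][job]
        return stale
```

A periodic task can call `reclaim_stale` for each pool and requeue whatever comes back, so a crashed render never holds capacity forever.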

3. The Prompt Reference System -> SpawnSpec Evolution

Original Design: Complex YAML configurations with nested templates and inheritance.

What Actually Worked (v1): Plain text files. One file per bot. Dead simple.

prompt_references/{bot_id}.txt

Each file was just creative direction in natural language. Gemini interpreted it beautifully. No schema, no validation, just vibes.
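Loading one of these files is about as small as code gets. A sketch, assuming the directory layout shown above and UTF-8 text; the function name matches the `load_prompt_reference` call that appears in a later snippet, but the body is a guess:

```python
from pathlib import Path

PROMPT_REFERENCE_DIR = Path("prompt_references")  # directory name from the post


def load_prompt_reference(bot_id: str,
                          base_dir: Path = PROMPT_REFERENCE_DIR) -> str:
    """Read a bot's free-form style guide; no schema, no parsing."""
    path = base_dir / f"{bot_id}.txt"
    return path.read_text(encoding="utf-8").strip()
```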

The SpawnSpec Revolution (v2): As the platform matured, I needed more control without losing simplicity. Enter SpawnSpec--a JSON configuration that's structured yet flexible:

    {
      "_schema": "spawn-spec/v1",
      "meta": {
        "id": "stormtrooper-disaster-cam",
        "name": "@StormtrooperVlog - Disaster Cam",
        "category": "Self-Vlog / Sci-Fi",
        "description": "8-sec shaky POV clips of a Stormtrooper mid-catastrophe."
      },
      "stack": {
        "provider_slug": "seedance",
        "fallback_slug": "veo3",
        "dialect_id": "Seedance_v1"
      },
      "template": {
        "prompt": "Real-footage 8-second selfie of a Stormtrooper in <<LOCATION>> while <<DISASTER>>. Frantic body-cam wobble; visor breathing audible. The trooper mutters: \"<<QUIP>>\" Subtitles off.",
        "dialogue": "This was not in the training manual!"
      },
      "publish": {
        "default_hashtags": ["#stormtrooper", "#galacticfail"],
        "sequence_blueprint": [
          {"prompt": "Intro crash", "duration_seconds": 6},
          {"prompt": "Chaos ensues"},
          {"prompt": "Escape", "duration_seconds": 4}
        ]
      }
    }

The Magic: Those <<PLACEHOLDERS>> aren't pre-defined lists. (In the actual specs they remain double-curly tokens such as {{LOCATION}}; I'm using angle brackets here to keep the Markdown parser calm.) The LLM invents fresh values every time; in practice, a single spec produced 200+ clips without repeating itself. One SpawnSpec can generate effectively unlimited variations while maintaining a consistent personality.

Why This Works:

- Structured enough for reliability

- Flexible enough for creativity

- LLMs handle the novelty generation

- Fallback providers ensure uptime

- Sequence support for multi-part stories

Learning: LLMs are remarkably good at working with both unstructured creative briefs and structured templates. The key is letting them handle the creative variation while the system handles the orchestration.
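The orchestration half of that split can stay deterministic. Here's a sketch of two checks a system could run around a SpawnSpec: finding and filling placeholders, and choosing between `provider_slug` and `fallback_slug`. This is an illustrative variant where the LLM returns only the placeholder values; the function names are hypothetical, and the pattern uses the angle-bracket notation from this post rather than the double-curly tokens in real specs.

```python
import re

# Post's display notation; real specs use double-curly tokens instead.
PLACEHOLDER = re.compile(r"<<([A-Z_]+)>>")


def placeholders(template: str) -> list[str]:
    """Unique placeholder names in order of first appearance."""
    return list(dict.fromkeys(PLACEHOLDER.findall(template)))


def fill(template: str, values: dict[str, str]) -> str:
    """Substitute every placeholder; fail loudly if a value is missing."""
    missing = [name for name in placeholders(template) if name not in values]
    if missing:
        raise KeyError(f"no value for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


def pick_provider(stack: dict, available: set[str]) -> str:
    """Prefer provider_slug, fall back to fallback_slug if it is down."""
    for key in ("provider_slug", "fallback_slug"):
        slug = stack.get(key)
        if slug in available:
            return slug
    raise RuntimeError("no provider available")
```

Failing on a missing value is deliberate: a prompt that ships with a raw placeholder in it wastes a render.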

4. Vector Search & SlopSpace

The Vision: A visual map of all content where users could explore by "warping" through latent space.

Implementation:

- CLIP embeddings for every video

- pgvector for similarity search

- UMAP projections for 2D visualization

Reality: The recommendation engine worked beautifully. The visual interface... still in progress. Turns out making an intuitive UI for n-dimensional space exploration is hard. Who knew?
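The core of embedding-based recommendation is a nearest-neighbor lookup by cosine similarity. In the database, pgvector handles this with its `<=>` cosine-distance operator in an `ORDER BY ... LIMIT k` query. The pure-Python sketch below shows the same idea on toy vectors; the function names and data are illustrative, not the production query.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query, embeddings, k=3):
    """Return the k video ids whose embeddings are closest to `query`."""
    scored = sorted(embeddings.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [video_id for video_id, _ in scored[:k]]
```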

The Numbers Game: Scale & Performance

What I Actually Built

- Total Videos Generated: ~9,000 fully automated videos

- Active SlopBots: 50-200 concurrent accounts at peak

- Platforms: iOS (React Native/Expo) + Web (Next.js)

- Models Accessed via Fal: Seedance, GPT Image, MiniMax, LTX, MMAudio, and more

- API Response Time: <100ms p50, <300ms p95

- Generation Pipeline: 30-90 seconds per video (varies by model complexity)

- Code Written by Me: 0 lines

- Code Written by LLMs: ~100,000 lines across two repositories

- Development Evolution: copy-paste (early) -> CLI-based direct modification (late)

Cost Optimization

The biggest surprise? AI costs scaled linearly, infrastructure costs did not.

- Gemini API calls: negligible (<$0.001 per video)

- Fal API rendering: ~$0.02-0.05 per video

- Infrastructure: fixed ~$200/month regardless of volume

- S3/CloudFront: the real cost at scale

Key Learning: Batch everything. Cache aggressively. The provider APIs are cheap; the orchestration overhead is expensive.
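Plugging the per-video figures above into the ~9,000-video total gives a feel for the split. This is back-of-the-envelope arithmetic only; S3/CloudFront is left out because no per-video figure is given for it.

```python
VIDEOS = 9_000
FAL_COST_PER_VIDEO = (0.02, 0.05)  # USD, low and high estimates from the list
GEMINI_COST_PER_VIDEO_MAX = 0.001  # USD, upper bound from the list
INFRA_PER_MONTH = 200              # USD, flat regardless of volume


def per_video_total(cost_range, count):
    """Scale a (low, high) per-video cost to a total for `count` videos."""
    return tuple(round(cost * count, 2) for cost in cost_range)


fal_low, fal_high = per_video_total(FAL_COST_PER_VIDEO, VIDEOS)
gemini_max = round(GEMINI_COST_PER_VIDEO_MAX * VIDEOS, 2)

print(f"Fal rendering: ${fal_low:.0f} to ${fal_high:.0f}")
print(f"Gemini ideation: under ${gemini_max:.0f}")
print(f"Infrastructure: ${INFRA_PER_MONTH} per month, independent of volume")
```

Rendering the whole catalog lands in the range of one to a few months of fixed infrastructure, which is why the fixed and storage costs end up mattering more than the model calls.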

Unexpected Emergent Behaviors

The Aesthetic Convergence Problem

Left completely autonomous, SlopBots would gradually converge toward similar aesthetics--a kind of "average AI video" style. I had to introduce:

- Diversity penalties in the recommendation engine

- Style drift detection with automatic prompt injection

- Periodic "chaos themes" to break patterns
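One common way to implement a diversity penalty is a greedy re-rank in the style of maximal marginal relevance: each pick's score is reduced by how much it resembles what was already picked. The sketch below is that swapped-in technique on toy data, not necessarily what SlopTok's recommendation engine does; the names and the penalty weight are assumptions.

```python
import math


def _cos(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rerank_with_diversity(candidates, k, penalty=0.5):
    """Greedy pick: relevance minus a penalty for resembling earlier picks.

    `candidates` maps video id -> (relevance score, embedding).
    """
    remaining = dict(candidates)
    picked = []
    while remaining and len(picked) < k:
        def adjusted(video_id):
            score, emb = remaining[video_id]
            # Similarity to the closest already-picked video (0 if none yet).
            overlap = max((_cos(emb, candidates[p][1]) for p in picked),
                          default=0.0)
            return score - penalty * overlap
        best = max(remaining, key=adjusted)
        picked.append(best)
        del remaining[best]
    return picked
```

With `penalty=0.0` this reduces to plain relevance ranking; raising it trades some relevance for a feed that doesn't show three near-identical clips in a row.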

The Viral Loop That Wasn't

I expected viral content emergence. Instead, I got something more interesting: micro-communities forming around specific bot personalities. Users didn't want viral; they wanted niche.

Technical Debt & Lessons Learned

What I Got Right

- Provider abstraction from day one - Made the ComfyUI -> Fal migration possible

- Celery for everything async - Rock solid, even at scale

- Django REST Framework - Boring technology that just works

- Firebase Auth - Outsourcing auth was the right call

What I'd Do Differently

- Start with managed services - I spent months on ComfyUI infrastructure that I threw away

- Build for mobile first - Desktop was an afterthought; mobile drove 80% of usage

- Invest in observability earlier - Debugging distributed generation jobs without proper tracing was painful

- Simpler database schema - I over-normalized early, leading to complex joins

Open Challenges & Future Directions

The Personalization Paradox

More personalization = less discovery. I'm still searching for the right balance between giving users what they want and showing them what they didn't know they wanted.

The Scale Ceiling

Current architecture caps around 10,000 concurrent bots. Beyond that, I'd need:

- Horizontal scaling of Celery workers

- Database sharding

- Multi-region deployment

The Business Model Question

Fully automated content is incredibly cheap to produce but challenges traditional monetization:

- No creators to rev-share with

- Users expect AI content to be free

- Ads feel wrong in an experimental platform

Current thinking: premium bot customization and API access for developers.

Code Snippets: The Interesting Bits

The Dispatcher Pattern (Pipeline v3)

    from celery import shared_task

    # GenerationJob, admission_control, and submit_to_provider live elsewhere
    # in the project.

    @shared_task(queue='light')
    def dispatch_generation_job(job_id):
        """Orchestrator that manages job lifecycle"""
        job = GenerationJob.objects.get(id=job_id)

        # Check resource availability
        if not admission_control.can_admit(job.provider.resource_pool):
            # No capacity: requeue this dispatcher after a fixed 30-second delay
            return dispatch_generation_job.apply_async(
                args=[job_id],
                countdown=30
            )

        # Reserve a token, then hand the job to the provider's heavy queue
        admission_control.acquire_token(job.provider.resource_pool)
        submit_to_provider.apply_async(
            args=[job_id],
            queue=f'heavy:{job.provider.slug}'
        )

The SlopBot Style Guide Interpreter

    def generate_prompt_from_reference(bot_id, topic):
        """Transforms style guide + topic into rendering prompt"""
        reference = load_prompt_reference(bot_id)

        prompt = f"""
        You are creating a video for a bot with this personality:
        {reference}

        Topic: {topic}

        Generate a visual prompt that captures this bot's unique style.
        Be specific about colors, movement, and atmosphere.
        """

        return gemini.generate(prompt, temperature=0.9)

Final Thoughts: What Is SlopTok Really?

SlopTok is simultaneously:

- A technical experiment in autonomous content generation

- A monument to AI slop that celebrates rather than hides its synthetic nature

- A meta-experiment in AI-assisted development (AI building AI)

- A playground for exploring AI creativity without pretense

- A time capsule of the pre-Sora 2 era of generative video

The platform asks uncomfortable questions: If AI can generate infinite content, what is content worth? If personalization is perfect, do we lose serendipity? If creation requires no effort, does it lose meaning?

I don't have answers. But building SlopTok taught me that the interesting problems in AI aren't technical--they're philosophical. The code is just how we explore them.

The Sora 2 Event: When Everything Changed

Then OpenAI dropped Sora 2.

Overnight, the entire landscape shifted. What took my pipeline 30-90 seconds with multiple providers, Sora could do better, faster, with cinematic quality. The technical moat I thought I was building evaporated.

But here's what I learned: The moat was never technical.

SlopTok's value wasn't in having the best video generation--it was in creating an ecosystem where:

- Bots have personalities

- Content generation is collaborative play

- The community embraces the artificial

- "Slop" is a feature, not a bug

Sora 2 can make better videos. But it can't make SlopBots. It can't create the weird, wonderful community that forms around specific bot personalities. It can't embrace the slop aesthetic because it's too busy trying to be perfect.

What Building with AI Taught Me

About Product Development

- Vision matters more than code - When AI writes the code, your job is pure product thinking

- Speed changes everything - Going from idea to deployment in hours restructures how you prioritize

- Technical debt becomes philosophical debt - The questions aren't "how do we refactor?" but "what are we building?"

About AI Development

- AI building AI is surprisingly stable - LLM-written code had fewer bugs than I expected

- Context is king - Good prompts beat good intentions

- Iteration beats perfection - Ship fast, let AI fix it faster

- CLI tools unlock velocity - The jump from copy-paste to direct modification changed everything

The Code That AI Wrote

The SpawnSpec Engine (Pipeline v3)

    import json

    from celery import shared_task

    @shared_task(queue='light')
    def generate_from_spawn_spec(account_id, spec_id):
        """LLM-powered prompt generation using SpawnSpec"""
        spec = SpawnSpec.objects.get(id=spec_id)
        dialect = load_prompt_dialect(spec.stack['dialect_id'])

        # Build the LLM prompt
        system_prompt = f"""
        You are SlopTok-Gen.
        Parse template.prompt and replace <<PLACEHOLDERS>> (double-curly in real specs) with fresh values.
        Follow the PromptDialect constraints: {json.dumps(dialect)}
        Return ONLY the final prompt string.
        """

        # LLM generates unique content from template
        response = gemini.generate(
            system=system_prompt,
            user=json.dumps(spec.spec_json),
            temperature=0.9
        )

        # Submit to Fal with automatic provider routing
        return submit_to_provider.apply_async(
            args=[account_id, response.prompt],
            queue=f'heavy:{spec.stack["provider_slug"]}'
        )

The Provider Abstraction Layer

    from typing import List

    # Assumption: `fal` is the project's Fal client object, and the model
    # names below are shorthand rather than full Fal endpoint IDs.

    class FalProviderAdapter:
        """Unified interface for all Fal-hosted models"""

        def submit(self, prompt: str, model_id: str, **kwargs):
            """Submit to any model through Fal's gateway"""
            # This abstraction saved us during the ComfyUI migration
            handler = fal.queue.submit(
                model_id,
                input={"prompt": prompt, **kwargs}
            )
            return handler.request_id

        def create_workflow(self, steps: List[dict]):
            """Chain multiple Fal endpoints into a workflow"""
            previous_output = None  # each step consumes the prior step's output

            for step in steps:
                if step['type'] == 'image':
                    previous_output = fal.run("gpt-image",
                                              prompt=step['prompt'])
                elif step['type'] == 'video':
                    previous_output = fal.run("seedance",
                                              image=previous_output,
                                              motion=step['motion'])
                elif step['type'] == 'audio':
                    previous_output = fal.run("mmaudio",
                                              video=previous_output,
                                              style=step['style'])

            return previous_output  # final output (None if there were no steps)

This architecture--all written by AI--let me switch providers seamlessly, chain models, and scale to thousands of generated videos.

Technical Takeaways from AI-Assisted Development

- Let AI handle the boilerplate - LLMs excel at CRUD operations, API endpoints, and standard patterns. Your job is the architecture.

- Boring technology still wins - Django, PostgreSQL, Redis. Even AI knows to reach for proven tools on critical paths.

- Abstractions are more important when AI codes - Good interfaces let you swap implementations without explaining the entire codebase to the AI again.

- AI makes prototyping insanely fast - I went from "what if we had multiple video providers?" to working implementation in 2 hours.

- Documentation is your conversation with future AI - Every comment helps the next AI session understand what past AI sessions built.

- CLI tools are game-changers - The jump from copy-paste to direct codebase modification (Codex, Claude Code) is like going from dial-up to broadband.

- Product vision beats technical prowess - When AI can implement anything, the question becomes what's worth implementing.

- Users still don't care about your architecture - They care that videos load fast and look cool. Even if AI wrote it all.

The Stack Today

Status: Post-Sora 2 reflection mode

Videos Generated: ~9,000

Bots Deployed: Too many to count

Code I Wrote: 0 lines

Code LLMs Wrote: All of it (~100,000 lines)

Lessons Learned: Priceless

SlopTok taught me that the future of development isn't about writing code--it's about designing experiences. It taught me that embracing the meme (literally, "AI slop") can lead to something genuinely interesting. Most importantly, it taught me that when AI can build anything, the question becomes: what's worth building?

The platform proved its point: AI can build AI, bots can create culture, and sometimes the best response to technological change is to lean into the absurd. Somewhere in those 9,000 videos is proof that the interesting frontier isn't perfection--it's personality.

Is it art? Is it slop? Does it matter?

The 9,000 videos I generated say: probably not. But it was fun finding out.

Epilogue: On Timing

They say timing is everything in startups. I built a generative video platform right before the biggest generative video breakthrough in history. Some would call that terrible timing.

I call it perfect timing.

I got to explore the weird frontier before it became mainstream. I got to build something absurd before everyone started taking it seriously. Most importantly, I got to learn what it means to build products in the age of AI--both as the builder and the built.

The future won't be about who can code. It'll be about who can imagine. And if there's one thing SlopTok proved, it's that imagination doesn't have to be serious to be valuable.

Sometimes, embracing the slop is enough.